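Background

GPUs are essential for AI workloads. This article walks through installing NVIDIA's Docker integration on a server and deploying a GPU-enabled container with it.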

Upgrade CUDA

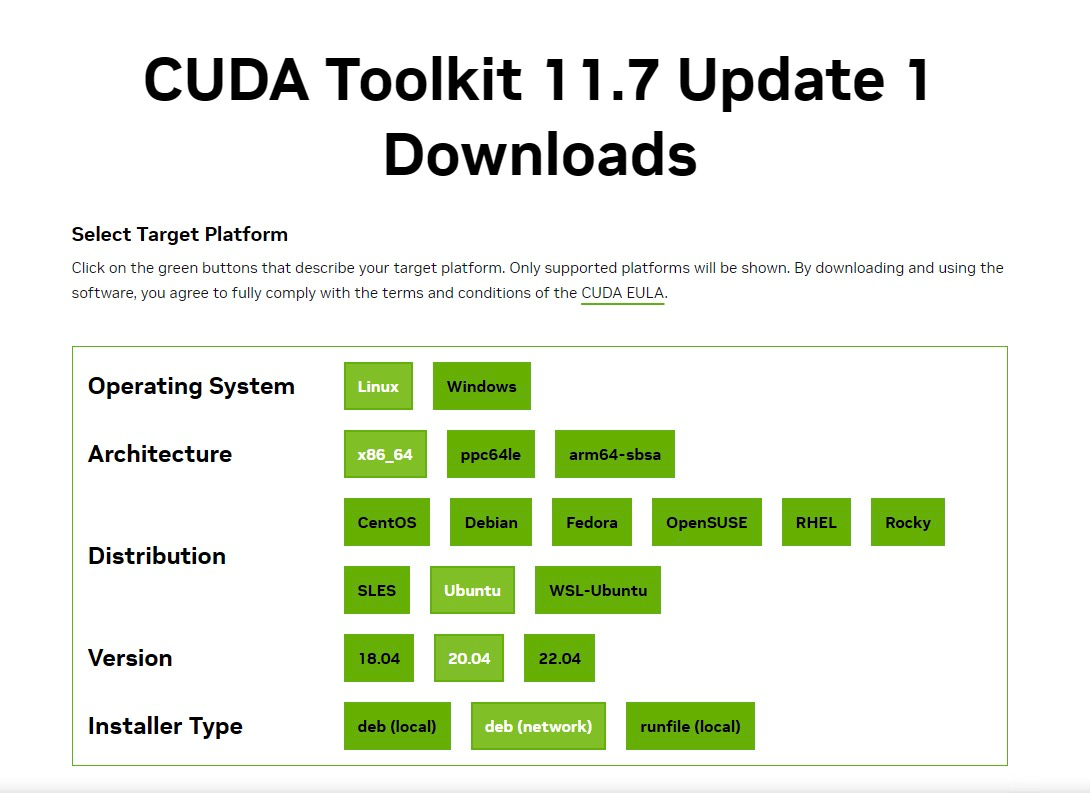

Select the version that matches your system and install it.

Installation

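- Make sure the NVIDIA driver and Docker Engine are already installed, and that the driver version is compatible with your Docker Engine version. You also need the nvidia-docker2 package, NVIDIA's plugin that gives Docker containers access to the GPU. Installation instructions are available at https://github.com/NVIDIA/nvidia-docker.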
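Before going further, it can help to confirm that both prerequisites are actually on the machine. The following is a minimal sketch, not an official check: the helper name `require_cmd` is hypothetical, and it only tests that the `nvidia-smi` and `docker` commands are on `PATH`, not that their versions are compatible.

```shell
#!/bin/sh
# Hypothetical helper: report whether a command is available on PATH.
# Prints "found: <name>" and returns 0, or "missing: <name>" and returns 1.
require_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found: $1"
    else
        echo "missing: $1"
        return 1
    fi
}

# nvidia-smi ships with the NVIDIA driver; docker ships with Docker Engine.
for cmd in nvidia-smi docker; do
    require_cmd "$cmd" || true
done
```

If either line reads `missing`, install that component first; the steps below assume both are present.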

1.1 Uninstall the old nvidia-docker (if installed)

    sudo apt-get remove nvidia-docker

1.2 Add the nvidia-docker2 repository

    distribution=$(. /etc/os-release; echo $ID$VERSION_ID)
    curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -
    curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list
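The `distribution` variable decides which repository list is downloaded, so it is worth seeing what it evaluates to. The sketch below wraps the same expression in a function (the name `distribution_of` is hypothetical) that reads `ID` and `VERSION_ID` from an os-release style file in a subshell, so the variables do not leak into your session.

```shell
#!/bin/sh
# Hypothetical helper: print "<ID><VERSION_ID>" from an os-release style file.
# Defaults to /etc/os-release, the file used in the step above.
distribution_of() {
    # The parentheses run this in a subshell, keeping ID and VERSION_ID local.
    ( . "${1:-/etc/os-release}" && printf '%s%s\n' "$ID" "$VERSION_ID" )
}

# Show the value for this machine, if the file exists.
if [ -r /etc/os-release ]; then
    distribution_of
fi
```

On Ubuntu 18.04, for example, this prints `ubuntu18.04`, which becomes the path segment in the repository URL.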

1.3 Update the package list and install nvidia-docker2

    sudo apt-get update
    sudo apt-get install nvidia-docker2

1.4 Restart the Docker daemon

    sudo systemctl restart docker
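After the restart, you may want to confirm that Docker knows about the `nvidia` runtime before running a container. The sketch below assumes the runtime is registered in `/etc/docker/daemon.json` (which is where the nvidia-docker2 package is expected to put it; your setup may differ) and simply searches that file. The function name `nvidia_runtime_status` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print whether a Docker daemon config mentions an
# "nvidia" runtime. The default path is an assumption about your setup.
nvidia_runtime_status() {
    config=${1:-/etc/docker/daemon.json}
    if grep -q '"nvidia"' "$config" 2>/dev/null; then
        echo "registered"
    else
        echo "not registered"
    fi
}

nvidia_runtime_status
```

This is only a text search, so it does not prove the runtime works. Running `docker info` and looking at its `Runtimes` line is another way to check.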

- Test whether the installation succeeded with the following command:

      docker run --gpus all nvidia/cuda:10.0-base nvidia-smi

- If the installation succeeded, you will see output similar to the following:

      +-----------------------------------------------------------------------------+
      | NVIDIA-SMI 440.33.01    Driver Version: 440.33.01    CUDA Version: 10.2     |
      |-------------------------------+----------------------+----------------------+
      | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
      | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
      |===============================+======================+======================|
      |   0  Tesla P100-PCIE...  On   | 00000000:00:1E.0 Off |                    0 |
      | N/A   32C    P0    32W / 250W |      0MiB / 16280MiB |      0%      Default |
      +-------------------------------+----------------------+----------------------+
      |   1  Tesla P100-PCIE...  On   | 00000000:00:1F.0 Off |                    0 |
      | N/A   32C    P0    32W / 250W |      0MiB / 16280MiB |      0%      Default |
      +-------------------------------+----------------------+----------------------+
      |   2  Tesla P100-PCIE...  On   | 00000000:00:20.0 Off |                    0 |
      | N/A   32C    P0    32W / 250W |      0MiB / 16280MiB |      0%      Default |
      +-------------------------------+----------------------+----------------------+
      |   3  Tesla P100-PCIE...  On   | 00000000:00:21.0 Off |                    0 |
      | N/A   32C    P0    32W / 250W |      0MiB / 16280MiB |      0%      Default |
      +-------------------------------+----------------------+----------------------+
      |   4  Tesla P100-PCIE...  On   | 00000000:00:22.0 Off |                    0 |
      | N/A   32C    P0    32W / 250W |      0MiB / 16280MiB |      0%      Default |
      +-------------------------------+----------------------+----------------------+
      |   5  Tesla P100-PCIE...  On   | 00000000:00:23.0 Off |                    0 |
      | N/A   32C    P0    32W / 250W |      0MiB / 16280MiB |      0%      Default |
      +-------------------------------+----------------------+----------------------+
      |   6  Tesla P100-PCIE...  On   | 00000000:00:24.0 Off |                    0 |
      | N/A   32C    P0    32W / 250W |      0MiB / 16280MiB |      0%      Default |
      +-------------------------------+----------------------+----------------------+
      |   7  Tesla P100-PCIE...  On   | 00000000:00:25.0 Off |                    0 |
      | N/A   32C    P0    32W / 250W |      0MiB / 16280MiB |      0%      Default |
      +-------------------------------+----------------------+----------------------+
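Reading the table by eye works, but for scripts it is often easier to count devices from the one-line-per-GPU listing that `nvidia-smi -L` prints. The sketch below is one way to do that; the function name `count_gpus` is hypothetical, and it assumes each device line starts with `GPU <index>:`.

```shell
#!/bin/sh
# Hypothetical helper: count GPUs listed on stdin, one "GPU <index>: ..."
# line per device, as printed by `nvidia-smi -L`.
count_gpus() {
    # grep -c exits non-zero when the count is 0, so mask that status.
    grep -c '^GPU [0-9]' || true
}

# Example (needs Docker and a GPU, so it is left commented out):
# docker run --rm --gpus all nvidia/cuda:10.0-base nvidia-smi -L | count_gpus
```

On a machine like the one in the sample output, the count should match the number of GPU rows in the table.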

Deployment

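- Create a new Dockerfile:

      FROM nvidia/cuda:11.7.0-cudnn8-runtime-ubuntu18.04

      # Install build tools and the SSH server
      RUN apt-get update && apt-get install -y \
          build-essential \
          git \
          wget \
          vim \
          openssh-server \
          && rm -rf /var/lib/apt/lists/*

      # sshd needs its privilege-separation directory to exist
      RUN mkdir -p /run/sshd
      # Placeholder root password; change it before exposing the container
      RUN echo "root:password" | chpasswd
      # Allow root login over SSH
      RUN sed -i 's/#PermitRootLogin prohibit-password/PermitRootLogin yes/' /etc/ssh/sshd_config
      EXPOSE 22
      # Run sshd in the foreground so the container stays up
      CMD ["/usr/sbin/sshd", "-D"]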

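- Create a new docker-compose.yml. The port mapping is quoted because YAML can misparse unquoted `HOST:CONTAINER` values when the container port is below 60:

      version: "3"
      services:
        cuda-container:
          build: .
          runtime: nvidia
          container_name: cuda-container
          ports:
            - "2201:22"
          volumes:
            - ./app:/app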

- Start the container:

      docker-compose up -d

- Verify that the GPUs are visible inside the container:

      docker exec -ti cuda-container nvidia-smi
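Right after `docker-compose up -d` the container may need a moment before `docker exec` succeeds, so a scripted check can benefit from a few retries. The following is a small sketch of that idea; the `retry` function is a hypothetical helper, not part of Docker, and the one-second pause is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical helper: run a command up to N times, pausing one second
# between attempts. Returns 0 on the first success, 1 if every attempt fails.
# Note: plain sh has no local variables, so attempts and n are global.
retry() {
    attempts=$1
    shift
    n=1
    until "$@"; do
        [ "$n" -ge "$attempts" ] && return 1
        n=$((n + 1))
        sleep 1
    done
}

# Example (needs the running container, so it is left commented out):
# retry 10 docker exec cuda-container nvidia-smi
```

Once the check passes, the SSH server from the Dockerfile should be reachable on the mapped host port, for example with `ssh -p 2201 root@localhost`.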